Connecting Your First LLM Provider

Learn how to connect your LLM provider API keys in Portal One. This step-by-step guide shows you how to add keys, manage them securely, and troubleshoot connections for your AI agents.

Portal One uses a default LLM for all new agents so you can get started quickly. However, you can update your AI agents to use any of the 50+ compatible LLMs.

Before You Begin:

- Ensure you have an active Portal One account.

- Have an active account with your chosen LLM provider (e.g., OpenAI, Anthropic, or Google).

- Make sure you have already generated an API key from your LLM provider's platform. If you need help finding or generating your key, see the "Finding Your API Key" section below.

Quick Reference

For a fast overview, here are the basic steps:

- Navigate to SETTINGS > API Keys in Portal One.

- In the "LLM Provider API Keys" section, find your provider and click "Add".

- Paste your API key into the "Key value" field and click "Save".

- Portal One does not test the key when you save it; the key is verified in practice the first time an agent uses it. If the agent responds normally, your key is connected!

Finding Your API Key

If you haven't generated your API key yet or need to find it, here are direct links to the API key pages for some popular providers:

- OpenAI: https://platform.openai.com/api-keys

- Anthropic: https://console.anthropic.com/settings/keys

- Google (Vertex AI / AI Studio): Refer to Google Cloud's documentation for creating API keys for Vertex AI services, such as the Google Cloud Client Libraries guide. Make sure you create a service account key or an API key, whichever is appropriate for the models you intend to use.

Always consult your specific LLM provider's official documentation for the most up-to-date instructions.

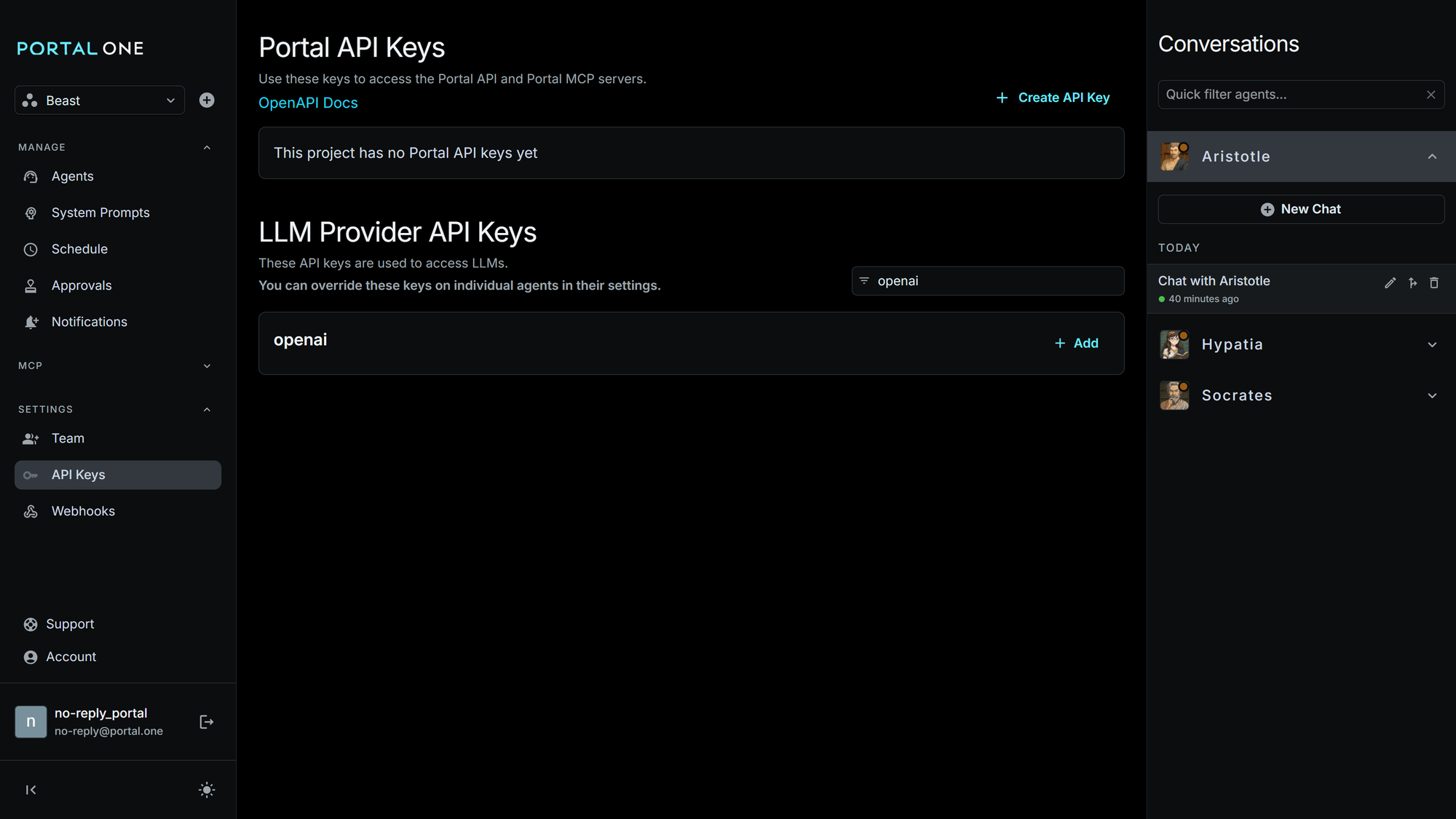

Step 1: Navigate to the API Keys Section in Portal One

You manage your LLM provider API keys from the Settings menu:

- On the main navigation bar in Portal One, click "SETTINGS" to expand the settings menu.

- Click the "API Keys" link, marked with a key icon (🔑).

Step 2: Locate the LLM Provider API Keys Area

On the "API Keys" page, you'll typically see two main sections:

- Portal API Keys: This section is for managing API keys related to Portal One's own API (for more advanced integrations).

- LLM Provider API Keys: This is the section this guide focuses on. It displays a card for each supported LLM provider, showing the provider's name (e.g., "OpenAI," "Anthropic") and an "Add" button.

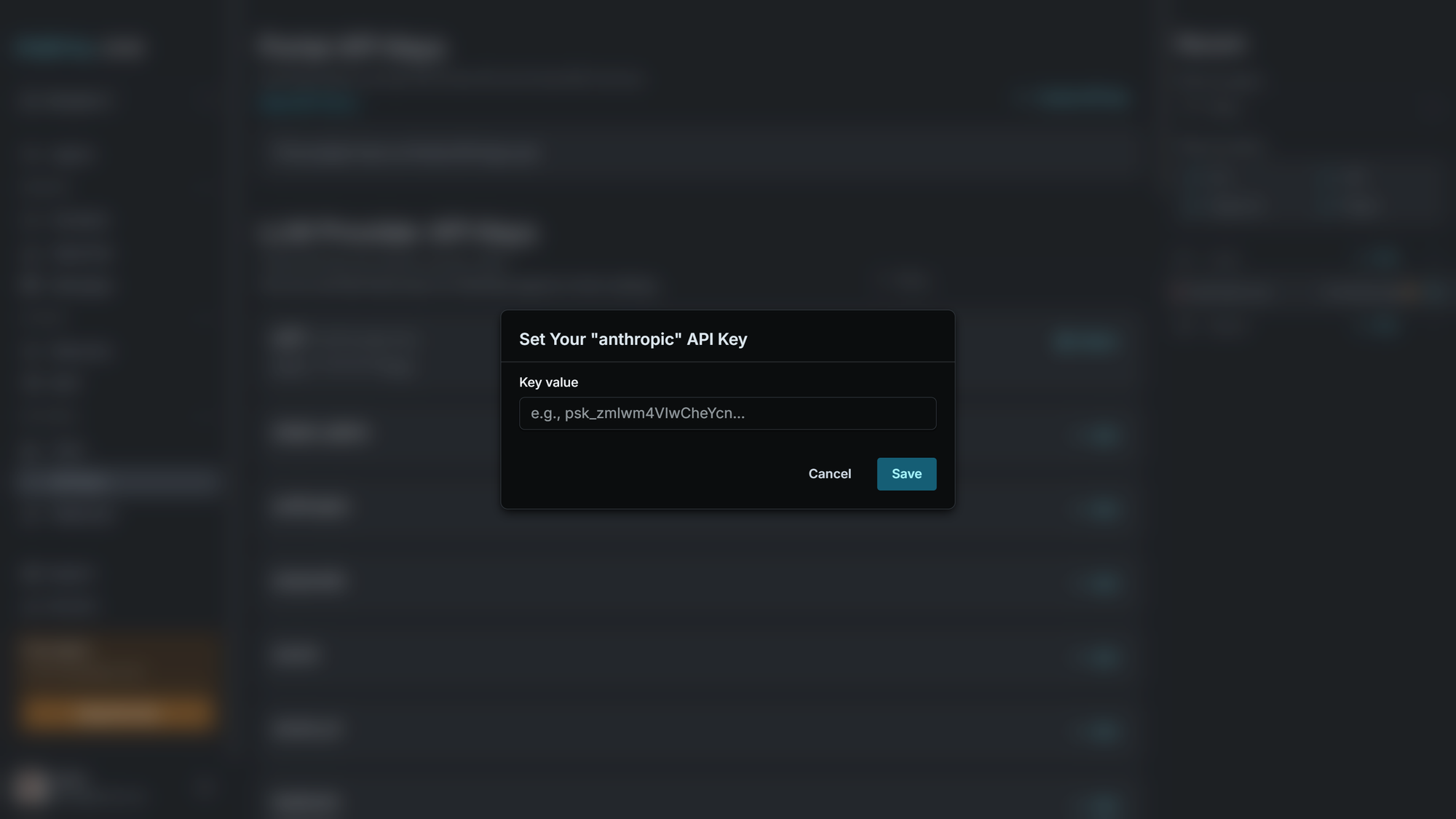

Step 3: Add Your LLM Provider API Key

- Identify your desired LLM provider from the list of cards in the "LLM Provider API Keys" section. Use the filter input to find the provider quickly.

- Click the "Add" button on that provider's card. An "Add API Key" dialog (or modal window) appears, typically titled something like "Set Your '[Provider Name]' API Key".

- In the dialog, find the field labeled "Key value" (or similar).

- Carefully paste your API key (which you obtained from your LLM provider's platform) into this field.

- Click the "Save" button.

When the key is saved successfully, the dialog closes and the provider's card displays your key in obfuscated form: partially hidden for security, with only the last few characters visible (e.g., ••••••••••••key123). This indicates that Portal One has stored your key.
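For illustration, here is a minimal Python sketch of how this kind of masking can work. It is not Portal One's implementation: the helper name `mask_key`, the 12-character mask, and the default number of visible characters are assumptions. A fixed-length mask is used so the display does not reveal how long the key is.

```python
def mask_key(key: str, visible: int = 4, mask_len: int = 12) -> str:
    """Return an obfuscated form of an API key that shows only its last characters.

    Hypothetical helper for illustration; Portal One's actual masking may differ.
    """
    key = key.strip()
    if visible <= 0:
        return "•" * mask_len
    # Never reveal more than half of a (very short) key.
    visible = min(visible, len(key) // 2)
    # Guard against key[-0:], which would return the whole string.
    tail = key[-visible:] if visible else ""
    # Fixed-length prefix so the masked value does not leak the key's length.
    return "•" * mask_len + tail
```

For example, `mask_key("sk-example-key123", visible=6)` returns `••••••••••••key123`, the same format shown on the provider's card.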

Note: Portal One simplifies this process by focusing on the API key itself. Other configurations, such as selecting specific models (e.g., gpt-4-turbo or claude-3-opus), are handled when you configure individual AI agents, not at this API key connection stage.

Security of Your API Keys (Advanced Note)

Portal One takes the security of your API keys seriously. When you save an LLM provider API key, it is encrypted both at rest (while stored in our systems) and in transit (while it travels between your browser and our servers). Encryption makes the key unreadable without the corresponding decryption key, protecting it from unauthorized access.

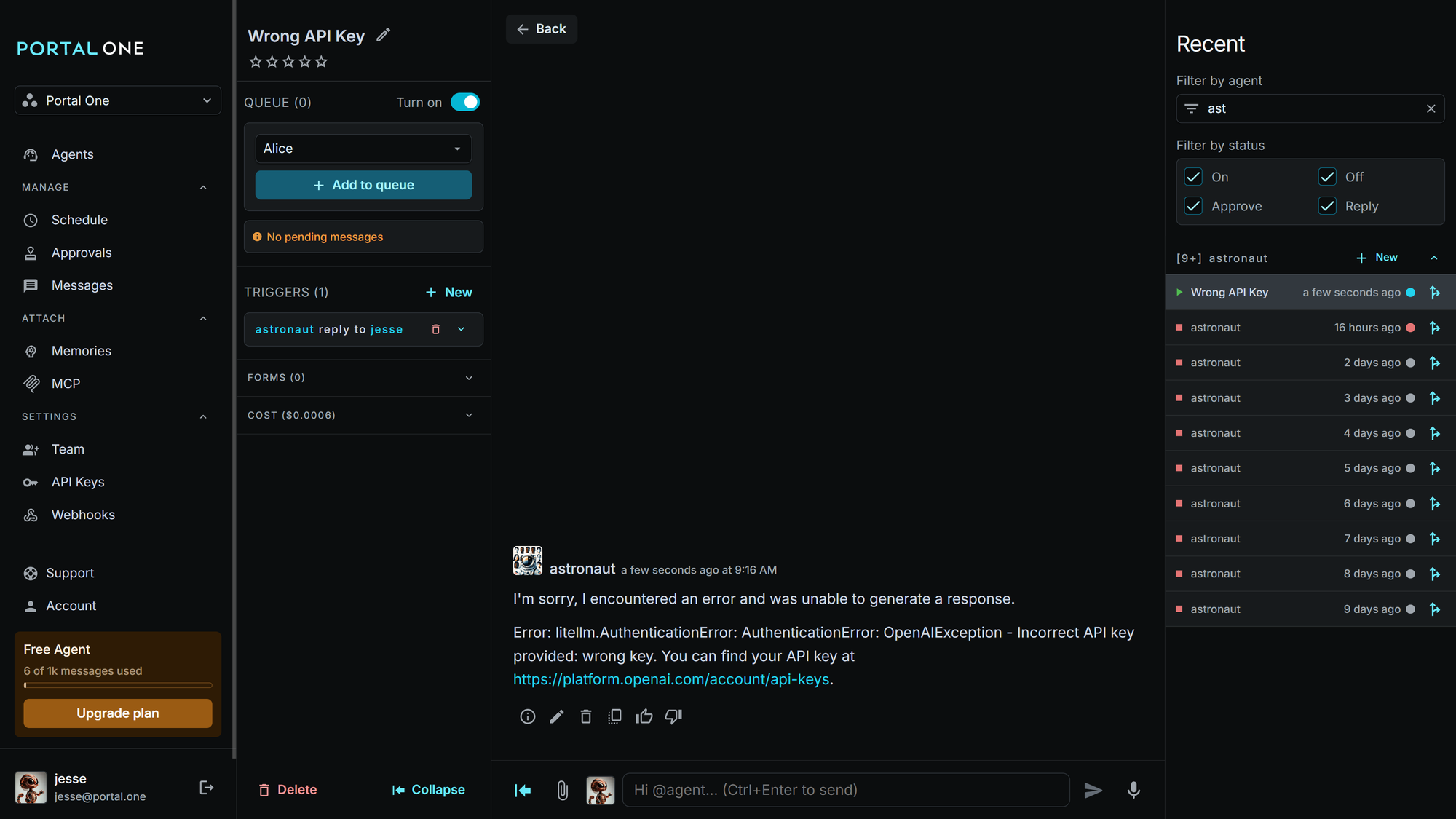

Verifying Your Connection & Troubleshooting

Portal One does not validate your LLM provider API key directly. Instead, you can verify the key by chatting with an agent that uses a model from that LLM provider.
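If you want to rule out Portal One entirely while troubleshooting, you can also test a key directly against the provider. The Python sketch below, using only the standard library, builds a request to OpenAI's list-models endpoint (`GET https://api.openai.com/v1/models`, authenticated with an `Authorization: Bearer` header) and maps the HTTP status to a likely cause. This check happens outside Portal One and is only an illustration: the helper names are hypothetical, the status-code hints follow general HTTP conventions, and other providers use different endpoints and headers, so consult their API references.

```python
import urllib.error
import urllib.request

OPENAI_MODELS_URL = "https://api.openai.com/v1/models"

# Likely causes by HTTP status (general HTTP conventions; check your
# provider's error documentation for the exact meaning).
STATUS_HINTS = {
    200: "Key accepted.",
    401: "Authentication failed: the key is incorrect, revoked, or expired.",
    403: "Key recognized but lacks permission for this resource.",
    429: "Rate limit or quota exceeded; the key itself may be fine.",
}


def build_openai_check(api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists the models the key can access."""
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key.strip()}"},
        method="GET",
    )


def explain_status(status: int) -> str:
    """Translate an HTTP status code into a troubleshooting hint."""
    return STATUS_HINTS.get(status, f"Unexpected status {status}; see the provider's documentation.")


def check_openai_key(api_key: str, timeout: float = 10.0) -> str:
    """Send the check request and return a hint (requires network access).

    Network failures (e.g. no connectivity) raise urllib.error.URLError and are not caught here.
    """
    try:
        with urllib.request.urlopen(build_openai_check(api_key), timeout=timeout) as resp:
            return explain_status(resp.status)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; err.code holds the status.
        return explain_status(err.code)
```

A mistyped OpenAI key should produce the 401 hint, which corresponds to the "Incorrect API key provided" error described in the troubleshooting steps.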

Successful Connection

If your agent successfully generates responses or completes its tasks using the selected LLM provider, your API key is working correctly!

Invalid API Key

If your API key is incorrect or invalid, the agent displays an error message directly in the chat interface. For example, you might see a message like "I'm sorry, I encountered an error and was unable to generate a response," often followed by a more specific error describing the authentication problem.

Troubleshooting Steps:

- Review the Error Message: The error message itself (like the one shown above) often provides clues. For instance, "Incorrect API key provided" clearly indicates an issue with the key itself.

- Double-check the API Key: Ensure the key you pasted into Portal One exactly matches the one provided by your LLM provider. Typos are common!

- Confirm Key Validity: Log in to your LLM provider's platform and verify that the API key is still active, has the necessary permissions (e.g., for the specific models you're trying to use), and hasn't expired or been revoked.

- Correct Provider: Make sure you've added the key to the correct provider card in Portal One (e.g., an OpenAI key to the OpenAI card).

- Re-enter the Key: If problems persist, try deleting the key from Portal One (see "Managing Connected LLM Providers" below) and then carefully re-adding it.
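If you suspect a copy-paste problem, a quick local check can catch the most common mistakes before you re-enter a key: stray whitespace or line breaks, and a key pasted onto the wrong provider card. The Python sketch below is hypothetical and not part of Portal One. It relies on key prefixes these providers have commonly used (OpenAI keys starting with `sk-`, Anthropic keys with `sk-ant-`, Google API keys with `AIza`); these formats are assumptions that providers can change, so treat a mismatch as a hint rather than proof.

```python
# Key prefixes commonly seen for each provider (assumption: providers may
# change their formats at any time, so a mismatch is only a hint).
# Longer prefixes come first so "sk-ant-" is matched before the generic "sk-".
KNOWN_PREFIXES = [
    ("sk-ant-", "Anthropic"),
    ("AIza", "Google"),
    ("sk-", "OpenAI"),
]


def check_pasted_key(raw: str, expected_provider: str) -> list[str]:
    """Return warnings about a pasted key; an empty list means no issues were found."""
    warnings = []
    if raw != raw.strip():
        warnings.append("Key has leading or trailing whitespace; remove it before saving.")
    key = raw.strip()
    if not key:
        warnings.append("Key is empty.")
        return warnings
    if any(ch.isspace() for ch in key):
        warnings.append("Key contains spaces or line breaks; it may have been copied incorrectly.")
    # Guess the provider from the first matching prefix (None if no prefix matches).
    guessed = next((name for prefix, name in KNOWN_PREFIXES if key.startswith(prefix)), None)
    if guessed is not None and guessed != expected_provider:
        warnings.append(
            f"Key looks like a {guessed} key but is being added to the {expected_provider} card."
        )
    return warnings
```

For example, `check_pasted_key("sk-ant-abc123\n", "OpenAI")` returns two warnings: one for the trailing line break and one because the key looks like an Anthropic key.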

Managing Connected LLM Providers

- Viewing Connections: Your connected LLM providers, along with their partially hidden (obfuscated) API keys, will be listed in the "LLM Provider API Keys" section.

- Updating an API Key: If you need to update an API key (e.g., if it has expired or you've rotated your keys), you'll generally need to:

  - Delete the old key: Look for the "Delete" button associated with the specific provider's key you want to change. Click it to remove the existing key.

  - Add the new key: Once the old key is removed, the "Add" button for that provider should reappear. Click it and follow Step 3 above to enter your new API key.

Important Considerations for Your API Keys

While Portal One securely stores your keys, remember these best practices:

- Consult Provider Documentation: Always refer to your specific LLM provider's documentation for the most accurate information on generating, managing, and securing your API keys.

- Confidentiality: Treat your API keys like passwords. Do not share them publicly or embed them directly in client-side code.

- Permissions (if applicable): Some LLM providers allow you to set specific permissions or scopes for API keys. Ensure the key you use with Portal One has the necessary permissions to access the models and perform the actions your agents will require.
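If you also call your LLM provider from your own server-side scripts, outside Portal One, the same confidentiality advice applies there: keep the key out of source code and load it at runtime. Below is a minimal Python sketch, assuming the key is stored in an environment variable; `OPENAI_API_KEY` is a common naming convention used here only as an example, and `load_api_key` is a hypothetical helper.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read an API key from the environment instead of hardcoding it in source code."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail loudly rather than sending requests with an empty key.
        raise RuntimeError(f"Set the {var_name} environment variable before running this script.")
    return key
```

Keeping the key in the environment (or a secrets manager) means it never ends up in version control or in code shipped to a browser.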

Next Steps

Congratulations! Once you have successfully connected your first LLM provider, you're ready to empower your AI agents. The connected provider and its associated models will now be available for you to select when you configure an agent.